Prestack Data Enhancement toolkit

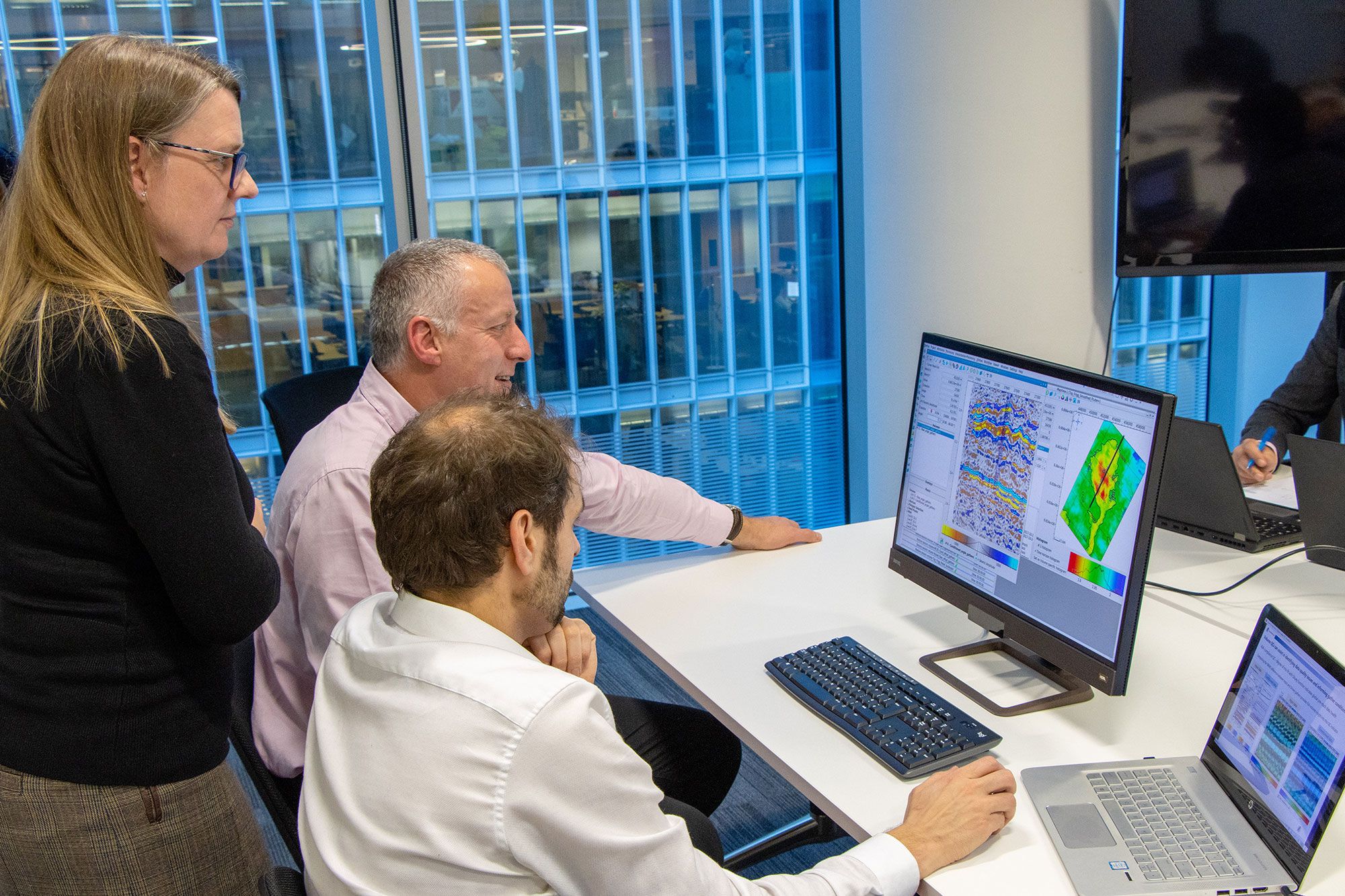

The Sharp Reflections PRO toolkit, powered by a high-performance computing (HPC) engine, equips geophysicists working together in exploration and production teams to seamlessly quality control (QC), calibrate and condition huge volumes of post-migration seismic data for targeted interpretation purposes.

The PRO processing tools operate on prestack gathers and on partial angle stacks, and let you quickly and flexibly optimize the reliability of your full-survey seismic data. With HPC, there is no need to decimate data or limit your working area. QC and processing workflows can be revisited quickly as fresh well information comes in to ensure optimal data quality throughout the life of the reservoir.

Process, condition and QC full-fidelity prestack or poststack seismic data, and integrate with advanced interpretation tools, all on a single HPC-powered platform.

Working on a single software platform that integrates inversion, azimuthal or 4D time-lapse data, you have the power to run multiple conditioning iterations, and see for yourself the impact on your final results, in minutes or hours rather than days or weeks.

Extract and explore the amplitude on any event throughout the processing workflow, without compromise. Link stacks, full-fold gathers and map viewers, and explore all angles of new prospects. In development and producing fields, calibrate to new well data and remove noise on repeat seismic surveys to track fluid movement with greater precision.

Benefits

Fast visual (QC) and map-based health checks

Interactive processing previews that let you test and select filter parameters more quickly

Robust coherent noise removal algorithms

Gather alignment tools to produce accurate AVA attributes and improve stack resolution

Workflow editor to automate and create consistent, repeatable processing workflows

Powered by the PRO toolkit

Unlocking the value of multi-dimensional data

Generate complex processing workflows and automate execution

Make repetitive tasks easy and efficient. When exploring a huge dataset, you want to try different parameters, and “what if” scenarios. The workflow manager makes it easy to set up and repeat tasks.

Try, try again—until you are satisfied that you have the best results.

How does it work?

How does the PRO toolkit optimize the accessibility of your seismic data? Your industry colleagues asked for details; our experts answered. Join the dialogue…

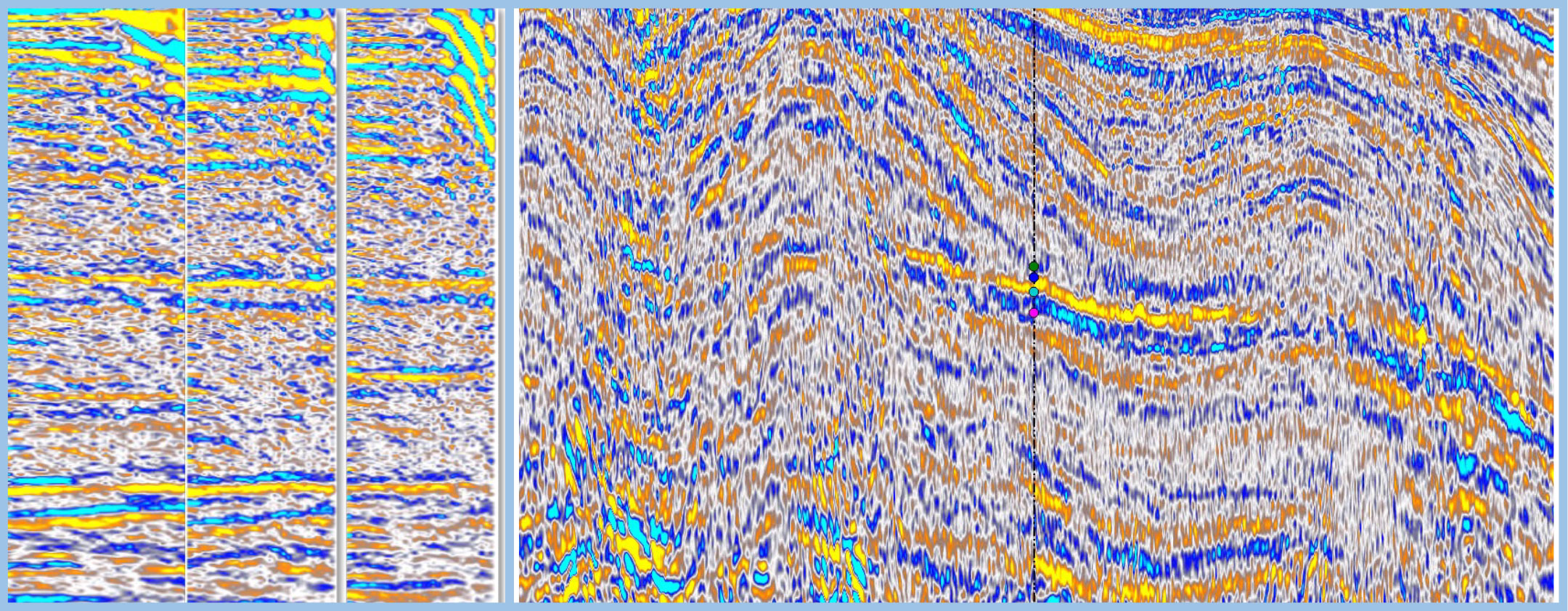

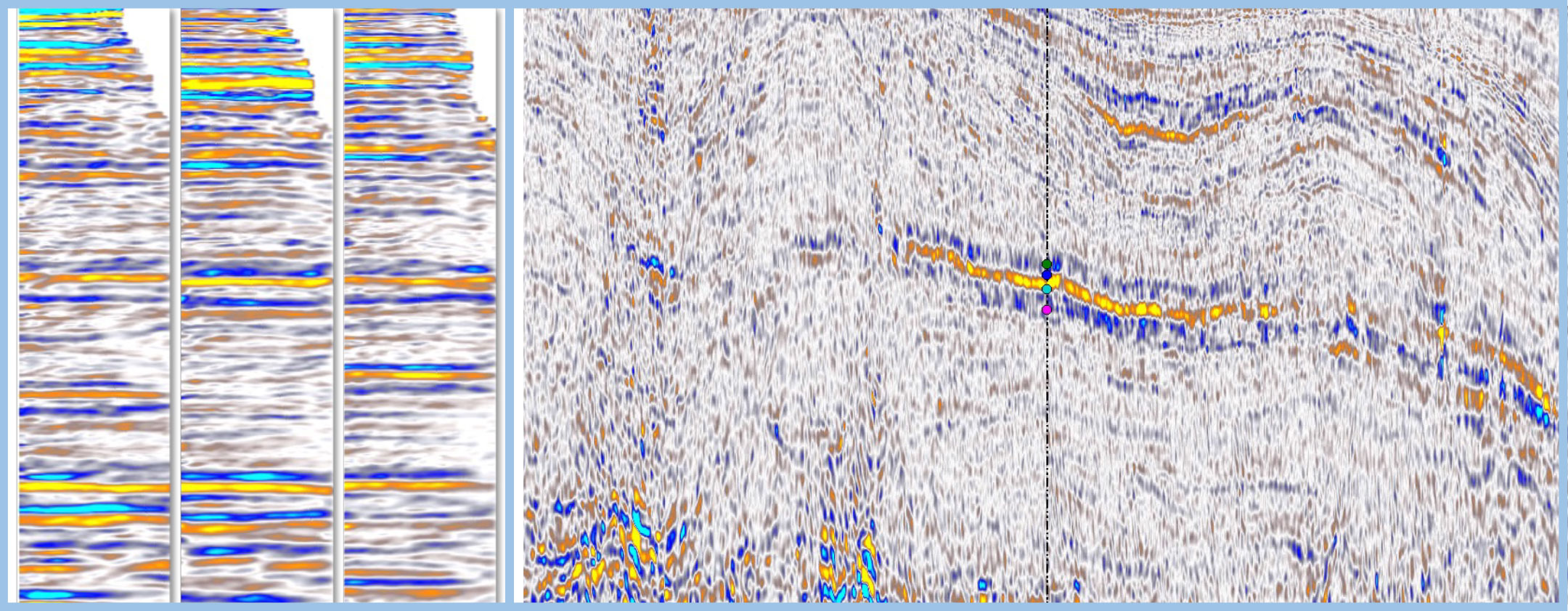

Conditioning the gathers has a huge impact on the final attribute, EEI 45°. The pictures show the offset gathers and the EEI 45° attribute before (slide right) and after (slide left) conditioning.

How do I improve the AVO fidelity of my data?


The data enhancement toolkit includes a range of advanced tools and techniques for improving the quality, accuracy, completeness and usefulness of your data. Examples include:

- Demultiple

- Random noise reduction

- Gather flattening

- AVO-compliant scaling and wavelet harmonization across offset or angle

- Q compensation and spectral shaping

- Time-depth conversion

- Conversion of offset gathers to angle gathers and vice versa
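To illustrate the idea behind offset-to-angle conversion, here is a minimal sketch in Python with NumPy. It assumes a straight ray in a constant-velocity medium, which is a textbook simplification and not a description of how the PRO toolkit performs the conversion. The function name `offset_to_angle` and its parameters are hypothetical.

```python
import numpy as np

def offset_to_angle(offset_m, t0_s, velocity_ms):
    """Incidence angle in degrees for a straight ray in a constant-velocity medium.

    offset_m:    source-receiver offset in metres
    t0_s:        zero-offset two-way travel time in seconds
    velocity_ms: assumed constant velocity in metres per second
    """
    # Reflector depth from two-way time: z = v * t0 / 2
    depth = velocity_ms * np.asarray(t0_s, dtype=float) / 2.0
    # The ray travels half the offset horizontally on its way down
    half_offset = np.asarray(offset_m, dtype=float) / 2.0
    return np.degrees(np.arctan2(half_offset, depth))

# Illustrative values only: three offsets at t0 = 1 s and v = 2000 m/s
angles = offset_to_angle([0.0, 1000.0, 2000.0], t0_s=1.0, velocity_ms=2000.0)
```

In practice the velocity varies with depth, so a real conversion would normally use a velocity model and ray bending rather than a single constant value.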

How do I condition terabytes of data?


Sharp Reflections software makes it easy to generate workflows and run them in batch on volumes of any size. Once you have interactively tested your conditioning processes, the user-friendly workflow generation tool lets you create and manage complex processing workflows for large datasets.

In addition, the software includes built-in optimization features and scalable architecture, allowing it to handle large volumes of data efficiently and effectively.

Does the PRO toolkit truly allow me to work interactively?


Parallel, in-memory architecture is particularly well-suited to interactive testing and QC processes. In-memory systems store data in RAM (random access memory), rather than on a disk or other slower storage media. By storing data in RAM, in-memory systems provide fast access to data, allowing for near-instantaneous processing and analysis of large datasets.

As a result, you are able to

- test volumes rather than lines

- test and validate data quickly and efficiently to ensure its accuracy and completeness

- perform real-time analytics and other time-sensitive tasks

- rapidly create iterations and test new data models and algorithms.

Can I process only narrow azimuth datasets? How do I condition my WAZ datasets?


All the algorithms have been extended to azimuthal (wide-azimuth) and time-lapse datasets, both onshore and offshore, providing you with a smooth experience.

The processing tools are adapted to handle directional data, including common offset vector and common offset/common azimuth data. These datasets may be used to map the orientation and properties of subsurface geological features, such as fractures and faults.

The processing toolkits are also adapted to multivintage datasets, giving access to extra features to ensure full time-lapse conditioning, which can then be used to monitor changes in subsurface properties, such as fluid pressure, temperature or seismic activity over time.

Can I process my data if I only have angle stacks or does your software work only with prestack gathers?


Improving your QI or inversion attributes from angle stacks is also possible with the Sharp Reflections PRO toolkit. For example, noise reduction and stack alignment algorithms can be used to improve your final attribute quality. You can use the flexibility and interactivity of the software to get the best from your input data, whatever its form, from offset gathers to stacks.

How can I QC my dataset on the full area, and be sure that the data is optimal not only on my test line?


“Health checks” are an important aspect of seismic data processing. They help to ensure that the data being analyzed is of sufficient quality and accuracy to support reliable interpretation and decision making. To assess the seismic data quality on the full area, health checks can be performed at various stages of the processing workflow.

Some key health checks that may be performed include the following.

- Noise and distortion analysis—to assess the level of noise in the seismic data, which can impact the accuracy and reliability of subsequent interpretations or QI studies.

- Data consistency and completeness—to assess the consistency and completeness of the seismic data, ensuring the AVO fidelity.

- Quality control of seismic attributes—to assess the quality of such attributes as amplitude, frequency and phase, to ensure that they are consistent and reliable across the full area.

Thanks to its in-memory architecture, Sharp Reflections software allows you to do interactive and extensive QCs. The QC maps can be linked to prestack data viewers to better assess the data quality and to better define the conditioning sequence.

How can I improve my data for better interpretation?


The PRO toolkit can be used to obtain a better and more reliable interpretation. It offers advanced noise reduction algorithms that attenuate random and coherent noise and help remove unwanted artifacts, resulting in higher quality seismic data.

The stack response can be enhanced by using time- and spatially variant mutes, as well as weighted stack techniques that use only the data with the best signal-to-noise (S/N) ratio.
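As a rough illustration of a weighted stack combined with a mute, the sketch below uses Python with NumPy. It is a generic example under stated assumptions, not the PRO toolkit's algorithm: muted samples are marked as NaN, and the per-trace weights stand in for an S/N estimate that you would compute separately. The function name `weighted_stack` is hypothetical.

```python
import numpy as np

def weighted_stack(gather, weights):
    """Stack traces sample by sample using per-trace weights.

    gather:  array of shape (n_traces, n_samples); NaN marks muted samples
    weights: array of shape (n_traces,), for example an S/N estimate per trace
    """
    gather = np.asarray(gather, dtype=float)
    live = ~np.isnan(gather)
    # Muted samples get zero weight so they do not contribute to the stack
    w = np.asarray(weights, dtype=float)[:, None] * live
    total = w.sum(axis=0)
    summed = (w * np.nan_to_num(gather)).sum(axis=0)
    # Return zero where every trace is muted, instead of dividing by zero
    return np.divide(summed, total, out=np.zeros_like(summed), where=total > 0)

# Illustrative values only: two traces, the first muted at the last sample
gather = [[1.0, 2.0, np.nan],
          [3.0, 4.0, 6.0]]
stack = weighted_stack(gather, weights=[1.0, 3.0])
```

Normalizing by the sum of the live weights at each sample keeps the stacked amplitude comparable between samples where different numbers of traces survive the mute.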

How can I be sure that the data is fit for my QI or inversion study?


The PRO toolkit streamlines the integration of conditioning with QAI and inversion workflows. It allows you to assess in real time the effect of the conditioning on final products. The speed of Sharp Reflections software means you can assess the effect of each individual process on the attributes used for interpretation.

Key technical capabilities

Interactive quality controls (QC) and health checks based on amplitude fidelity to identify

- gather flatness

- amplitude variation with angle (AVA) compliance

- noise, both coherent and incoherent

- amplitude and phase integrity

Interactive processing previews that let you test and select filter parameters more quickly

Robust coherent noise removal algorithms including 3D dip filters, parabolic and linear Radon and Tau-P Decon

2D and 3D random noise attenuation

Normal moveout (NMO) correction

Gather alignment tools, including residual moveout correction and time-variant trim statics, to produce accurate AVA attributes and improve stack resolution

Velocity editor using semblance spectrum

Wavelet shaping including Q compensation in amplitude and phase, spectral balancing and enhancement, match filtering to improve wavelet consistency

Workflow editor to automate and create consistent, repeatable processing workflows

Offset/angle conversion and partial angle stacking

Broad range of prestack seismic attributes, including intercept/gradient, colored acoustic impedance (AI) and extended elastic impedance (EEI), and numerous combinations
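For readers unfamiliar with these attributes, the sketch below shows one common way to estimate intercept and gradient and to project them to an EEI-style reflectivity, written in Python with NumPy. It assumes the two-term approximation R(θ) ≈ A + B sin²θ and the published EEI projection A cos χ + B sin χ. It is a textbook illustration, not the PRO toolkit's implementation, and the function names and amplitude values are hypothetical.

```python
import numpy as np

def intercept_gradient(angles_deg, amplitudes):
    """Least-squares fit of R(theta) = A + B * sin^2(theta) at one time sample."""
    s2 = np.sin(np.radians(angles_deg)) ** 2
    # Design matrix: a column of ones for A and sin^2(theta) for B
    design = np.column_stack([np.ones_like(s2), s2])
    (a, b), *_ = np.linalg.lstsq(design, np.asarray(amplitudes, dtype=float), rcond=None)
    return a, b

def eei_reflectivity(a, b, chi_deg):
    """Project intercept A and gradient B to the angle chi: A*cos(chi) + B*sin(chi)."""
    chi = np.radians(chi_deg)
    return a * np.cos(chi) + b * np.sin(chi)

# Synthetic, noise-free amplitudes built from A = 0.1 and B = -0.2
angles = np.array([5.0, 15.0, 25.0, 35.0])
amps = 0.1 - 0.2 * np.sin(np.radians(angles)) ** 2
a, b = intercept_gradient(angles, amps)
r45 = eei_reflectivity(a, b, 45.0)
```

Because the fit uses amplitudes across all angles at a given time sample, residual moveout or angle-dependent noise in the gather feeds directly into A and B, which is why gather conditioning has such a visible effect on the final attribute.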

To learn from our experts how to do all this in a real project, sign up for this course:

Dive into the details

Sharp Reflections software equips users to explore complex, multidimensional data volumes interactively using five toolkits. Users move smoothly across the entire spectrum of workflows, from data processing and conditioning through to 4D time-lapse interpretation, all on one platform.

Quantitative Amplitude Interpretation

Inversion

Azimuthal

Improve illumination and understanding of complex geological structures.

4D Time-Lapse

All the data for the best decisions

Sharp Reflections turns huge datasets into assets instead of roadblocks. Our software helps clients explore their seismic data—every terabyte—to develop deeper, purer views of their reservoirs.

Sharp Reflections is the industry’s only software platform built on a powerful compute and display engine designed specifically for high-performance computing (HPC), on premises or in the cloud.

Our integrated platform enables users to start analyzing and interpreting seismic data as soon as processing begins. No information is wasted as users reduce uncertainty and fine-tune their reservoir characterization to support trustworthy exploration, drilling and production decisions.